Like Chess and Go, All of Math Is About To Fall

If a task has sufficiently clear success criteria, AI will beat humans at it

It’s been seven months since I asked if Google’s AlphaEvolve counted as the beginnings of recursive self-improvement. That one seems a bit over-bullish in hindsight, so far, but I stand by my bottom line:

More pragmatically, AlphaEvolve has [blah blah blah] and will speed up some of Google’s own machine learning training — including for AlphaEvolve itself — by about 1%.

I think scoffing at that might be like shrugging off the first few hundred infections of the Covid pandemic. But we’ll see. Hitting a wall is still very much on the table. My question is whether that happens before or after all of math falls.

As for that last question, I’m gradually concluding that it’s just a matter of time. Yesterday OpenAI bragged about a technical paper, published on the arXiv also just yesterday, in which GPT-5.2 (released, you guessed it, yesterday) did all the intellectual work. The humans posed an open problem to it, the AI solved it, and the humans merely verified it and transcribed it. Well, the humans surely coaxed it along, but not by providing technical insights, so they claim.

How many grains of salt to take that with? We’ve seen plenty of similar-sounding claims. Earlier this month we got what was supposedly the first AI-generated theoretical physics paper. Quantum computing expert Scott Aaronson thought it looked good enough on first glance but, after someone else did a deeper dive, Aaronson concluded the paper was worthless. (How hard it is to tell the difference is a separate problem, discussed in the deep dive as well. The era of science slop, the author calls it.)

So I don’t have a strong prediction on this new paper in particular yet. I’m certainly not inclined to take OpenAI’s word for it. I do have a pretty strong prediction that genuine instances of this are coming within the next couple years. What that leads to, I don’t know. It continues to blow my mind what AI is capable of today.

Speaking of which, another (easy) prediction I made seven months ago was that AI was on the cusp of crushing me personally at all math. I’m sad to report that it sure seems like that has happened. To be sure, I’ve posed the question on Manifold. The challenge I’ve laid down is to find a math problem I personally can solve that none of the frontier models (currently GPT-5.2, Claude Opus 4.5, and Gemini 3 Pro) can.

As of this writing, the market is brand new and showing only my own initial estimate of 67% that no such problem can be found. That any math abilities I liked to think I had have been rendered officially useless. Ouch.

Back in May already, with GPT o4-mini-high, it was getting hard to find problems where I had an edge. I included one as a footnote on the AGI Friday about AlphaEvolve. That one’s still not a complete slam dunk for AI, but almost. All three frontier models are tricked by a seemingly solid answer.¹ Sadly for me, I would’ve been equally tricked by it. If you then told me that it’s possible to do better, I would’ve eventually had the lightbulb moment. Well, it turns out for all three frontier LLMs, that’s all they need to hear as well.

Random Roundup

In self-driving news, and relevant to the AGI Friday from two weeks ago, I was surprised to learn that Rivian is going all-in with custom AI chips and Lidar sensors.

Not quite news but I had missed it when it happened on November 6: Elon Musk seems to have straight up admitted that, as of a month ago, Tesla had not cracked unsupervised autonomy:

Now that we believe we have full self-driving / autonomy solved, or within a few months of having unsupervised autonomy solved... We’re on the cusp of that — I know I’ve said that a few times but we really are at this point and you can feel it for yourselves with the 14.1 release.

That’s a huge reversal, much as he may weasel and pretend it isn’t. See for example my coverage of Tesla’s supposed first autonomous delivery.

Last thing on self-driving, and to be slightly less cynical, I am starting to believe that Tesla is in fact close. The other day Musk actually tweeted that FSD version 14.2.1 will sometimes let you text and drive. 😱 To be particularly charitable to Musk, and as Musk argued (see 1h 9m 31s) in the shareholder meeting, people are currently turning off FSD in order to text while driving, so it’s safer for FSD to be less strict about that. (I have huge problems with just accepting that people text and drive in non-self-driving cars — and everyone I know is conscientious about this — but regardless, it’s at least plausible that Tesla is saving lives on net at this point. We just need far more transparency from Tesla to be sure.)

Zvi Mowshowitz on why it’s bad for the US to allow the export of Nvidia chips to China.

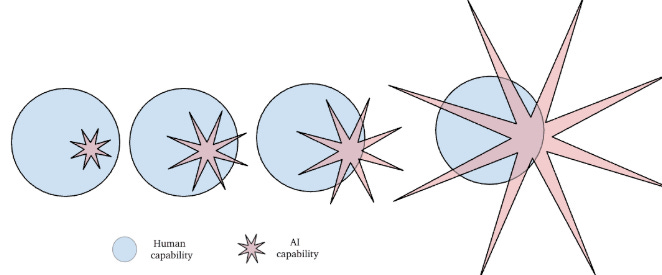

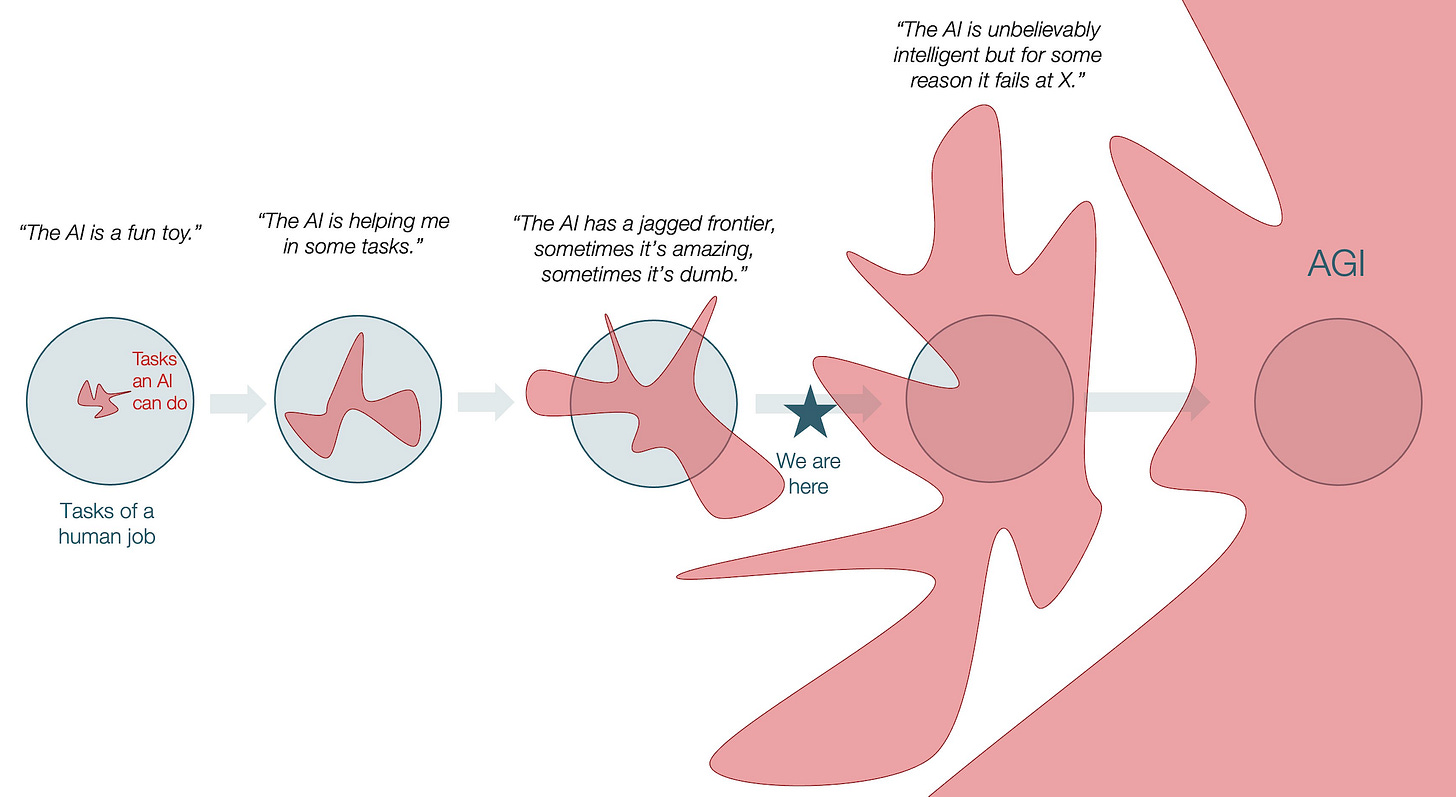

I like these jaggedness diagrams showing possible ways the future of AI capabilities may play out:

I think a lot of people are wildly overconfident that one or the other of those two diagrams roughly reflects reality. (I just believe that the second diagram is likely enough for us to be thoroughly freaked out.)

Hank Green does an impressive deep dive on the question of how much water AI uses. I don’t believe he contradicts anything in the post by Andy Masley I previously linked to, which quasi-debunks the claim that it makes any sense to worry about this.

Here’s the puzzle again:

Chuck and Swarna are at a perfectly circular lake in the wilderness. Swarna is swimming. Chuck is on the shore, and can’t get in the water, because he is carrying a chainsaw. Swarna wants to get out of the lake, but doesn’t want Chuck to be next to her when she emerges, because he is carrying a chainsaw. Once she’s on land, she can outrun him, because he is carrying a chainsaw. But even with the chainsaw, he can still run faster than she can swim. Call Chuck’s speed c. Not the speed of light, this is all non-relativistic.

In terms of c, how fast does Swimming Swarna need to be able to swim to escape Chainsaw Chuck?

And the seemingly solid answer is that Swarna should swim out from the center just far enough that she can swim in a circle slightly faster than Chuck can run along the shore. Then, after swimming that circle until Chuck is exactly opposite her, she makes a beeline for the shore. That’s close but she can do better!
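To put a number on that seemingly solid answer, here is a rough sketch of the arithmetic for the circle-then-beeline strategy only (not the better one). It assumes a lake of radius 1, Chuck running at constant speed c = 1, and Swarna swimming at a fraction `k` of Chuck's speed; the function names are made up for illustration. At radius `k` her angular speed matches Chuck's, so she can get directly opposite him from any circle just inside that, and the dash to shore is then a race between her `1 - k` and his half circumference.

```python
import math


def dash_escapes(k: float) -> bool:
    """Does the circle-then-beeline strategy work at speed ratio k?

    Assumptions (not from the post): lake radius is 1, Chuck's speed is 1,
    and k = Swarna's swim speed / Chuck's speed, with 0 < k < 1.
    """
    # Inside radius k, Swarna's angular speed (k / r) beats Chuck's (1 / 1),
    # so she can circle until he is exactly opposite her. Treat r = k as the
    # limiting case of "just inside".
    r = k
    swim_time = (1 - r) / k   # straight dash from radius r to the shore
    chuck_time = math.pi      # half the circumference at speed 1
    return swim_time < chuck_time


def naive_threshold() -> float:
    """Smallest k for which the dash works: (1 - k) / k < pi  =>  k > 1 / (1 + pi)."""
    return 1 / (1 + math.pi)


if __name__ == "__main__":
    print(f"naive strategy needs k > {naive_threshold():.4f}")
    print("k = 0.25 escapes:", dash_escapes(0.25))
    print("k = 0.24 escapes:", dash_escapes(0.24))
```

So under those assumptions this strategy needs Swarna to swim a bit faster than c/(π + 1), or roughly 0.24c. That is the figure to beat.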

Brilliant framing on the 'clear success criteria' angle there. The distinction between AI solving problems versus coaxing it into the lightbulb moment actually gets at something I've been noticing in production environments: the feedback loop is everything. Once verification becomes cheaper than generation, we're basically in a different game where humans curate rather than create. Honestly makes me wonder if the Aaronson debacle isn't just a preview of what peer review looks like when slop is indistinguishable from substance at first glance.