Tesla Robotaxis vs Human Drivers (and Waymos and Zooxes)

In which we go spelunking in the raw data and conclude that... we know nothing except that Waymo crushes everyone

(AGI Friday is now one year old! See the first item in the Random Roundup below for a quick retrospective on my take on AI risk from a year ago.)

Last week I mentioned a claim in the media that Tesla robotaxis are worse drivers than humans. Since no one writing about Tesla can be trusted, I’ve been digging into the raw data to try to learn the truth. Not that you can trust me either. I’ve kind of established myself as a Tesla skeptic and Waymo superfan. But I think if you look back at even my posts that sound like the most ridiculous cope, like “Nooo, This Doesn’t Count, Tesla Is Faking It”, they hold up surprisingly well. I’m working hard to be objective about this.

When I say raw data I mostly mean the National Highway Traffic Safety Administration (NHTSA) data on traffic collisions (“incidents”) occurring from 2025 June 15 to December 15 for unsupervised autonomous vehicles.

There are a dizzying number of assumptions we have to make in order to reach any conclusions. Except for Waymo, which is remarkably transparent and for which we have plenty of data. Let me point you again to Kelsey Piper’s excellent analysis, which has these conclusions:

Waymos are at least 2x less likely than humans to have any kind of collision

Waymos are 5x less likely than humans to have a crash with any injuries

Waymos are 5x less likely than humans to have an airbag-deploying crash

Waymos are 10x less likely than humans to have a crash with serious injuries

Waymos are, as a conservative lower bound, 3.2x less likely than humans to have a fatal crash

That 2x is likely way too low, for the simple reason that all the robotaxi companies are (if they’re following the law) reporting every collision, no matter how minor — even bumping into stationary objects. We only ever learn about human drivers’ collisions when an insurance claim or police report is filed. So probably ignore the 2x. Likewise, the 10x is arguably biased against humans because, well, Waymos don’t always have a human occupant at all, so no one to get injured. And the 3.2x difference for fatalities is almost meaningless because of lack of data, thankfully. Waymo has famously, with going on 200 million autonomous miles, never killed anyone.

We’re able to get a number on fatalities at all due to two incidents. In one a reckless human driver hit a line of stationary cars, one of which was a Waymo. In another a motorcycle rear-ended a Waymo yielding to a pedestrian and the motorcyclist was subsequently killed by a third vehicle. So the Waymo not only wasn’t at fault either time but had no way, even in theory, to prevent those tragic outcomes. But the idea with this data is to ignore fault and just distribute the attribution of the fatalities equally among all vehicles involved.

The meaningful Waymos-vs-humans numbers are the ones for crashes with injuries and airbag deployments (the latter having the advantage that airbags deploy with or without humans in the car). Such accidents are still at least somewhat underreported by human drivers. Also the streets where Waymos mostly drive (like downtown San Francisco) are more dangerous than average. So it’s safe to say that Waymos are at least 5x safer than humans.

Alright, back to Tesla and the dizzying array of assumptions. In particular, we’ll assume Tesla is not using remote operators and that the passenger-seat safety monitors never intervene in real time. I’ve been skeptical of this, but, by reporting their incidents to NHTSA, Tesla is averring that they’re at level 3+ autonomy with no human operator, in-vehicle or remote. So, ok, we’ll tentatively believe them while stockpiling popcorn in case a scandal breaks.

So what are these incidents? There are 9 of them in the 6-month period for which NHTSA provides data. For 4 of those 9, the robotaxi was going 2mph or less. And only 1 involved (minor) injuries. Here are what I believe to be the most complete characterizations of the incidents we can get from the NHTSA data:

1. July, daytime, an SUV’s front right side contacted the robotaxi’s rear right side with the robotaxi going 2mph while both cars were making a right turn in an intersection; property damage, no injuries
2. July, daytime, robotaxi hit a fixed object with its front right side on a normal street at 8mph; had to be towed and passenger had minor injuries, no hospitalization
3. July, nighttime, in a construction zone, an SUV going straight had its front right side contact the stationary robotaxi’s rear right side; property damage, no injuries
4. September, nighttime, a robotaxi making a left turn in a parking lot at 6mph hit a fixed object with the front right side of the car; no injuries
5. September, nighttime, a passenger car backing up in an intersection had its rear right side contact the right side of a robotaxi, with the robotaxi going straight at 6mph; no injuries
6. September, nighttime, a cyclist traveling alongside the roadway contacted the right side of a stopped robotaxi; property damage, no injuries
7. September, daytime, a stopped robotaxi traveling 27mph [sic!] hit an animal with the robotaxi’s front left side; no injuries [presumably “stopped” is a data entry error]
8. October, nighttime, the front right side of an unknown entity contacted the robotaxi’s right side with the robotaxi traveling 18mph under unusual roadway conditions; no injuries
9. November, nighttime, front right of an unknown entity contacted the rear left and rear right of a stopped robotaxi; no injuries

All the other details are redacted. I guess Tesla feels like it has a lot to hide? The law allows them to redact details by calling them “confidential business information” and they’re the only company doing that, out of roughly 10 companies. Typically the details are things like this from Avride:

Our car was stopped at the intersection of [XXX] and [XXX], behind a red Ford Fusion. The Fusion suddenly reversed, struck our front bumper, and then left the scene in a hit-and-run.

I.e., explaining why it totally wasn’t their fault, with only things that could conceivably be confidential, like the exact location, redacted. So I don’t think Tesla deserves the benefit of the doubt here but if I try to give it anyway, here are my guesses on severity and fault:

1. Minor fender bender, 30% Tesla’s fault (2mph)
2. Egregious fender bender, 100% Tesla’s fault (8mph)
3. Fender bender, 0% Tesla’s fault (0mph)
4. Minor fender bender, 100% Tesla’s fault (6mph)
5. Minor fender bender, 20% Tesla’s fault (6mph)
6. Fender bender, 10% Tesla’s fault (0mph)
7. Sad or dead animal, 30% Tesla’s fault (27mph)
8. Fender bender, 50% Tesla’s fault (18mph)
9. Fender bender, 5% Tesla’s fault (0mph)

Those guesses, especially the fault percentages, are pulled out of my butt. Except the collisions with stationary objects, which are necessarily 100% Tesla’s fault. If we run with those guesses and add up the fault percentages, that’s the equivalent of 3.45 at-fault accidents. But since we have no confidence in the at-fault percentages, let’s do what we did with the Waymo fatality data and just count everything regardless of fault. That’s at least apples-to-apples between the different companies.
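For anyone who wants to check the tallies, here is a minimal Python sketch of the arithmetic. The speeds come from the incident list above and the fault shares are my guesses from the list just above, so the fault-weighted total is only as good as those guesses. The tuple layout and variable names are made up for illustration.

```python
# Each entry: (month, robotaxi speed in mph, guessed share of fault for Tesla).
# Speeds are from the NHTSA-derived list; fault shares are guesses, not data.
incidents = [
    ("July", 2, 0.30),
    ("July", 8, 1.00),       # hit a fixed object
    ("July", 0, 0.00),
    ("September", 6, 1.00),  # hit a fixed object
    ("September", 6, 0.20),
    ("September", 0, 0.10),
    ("September", 27, 0.30),
    ("October", 18, 0.50),
    ("November", 0, 0.05),
]

total = len(incidents)
# Incidents where the robotaxi was going 2mph or less.
low_speed = sum(1 for _, mph, _ in incidents if mph <= 2)
# Fault-weighted count: the sum of the guessed fault shares.
at_fault_equivalent = round(sum(fault for _, _, fault in incidents), 2)

print(f"{total} incidents, {low_speed} at 2mph or less, "
      f"{at_fault_equivalent} at-fault equivalents")
```

Changing any one fault guess moves the weighted total by the same amount, which is part of why counting every incident regardless of fault is the safer comparison.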

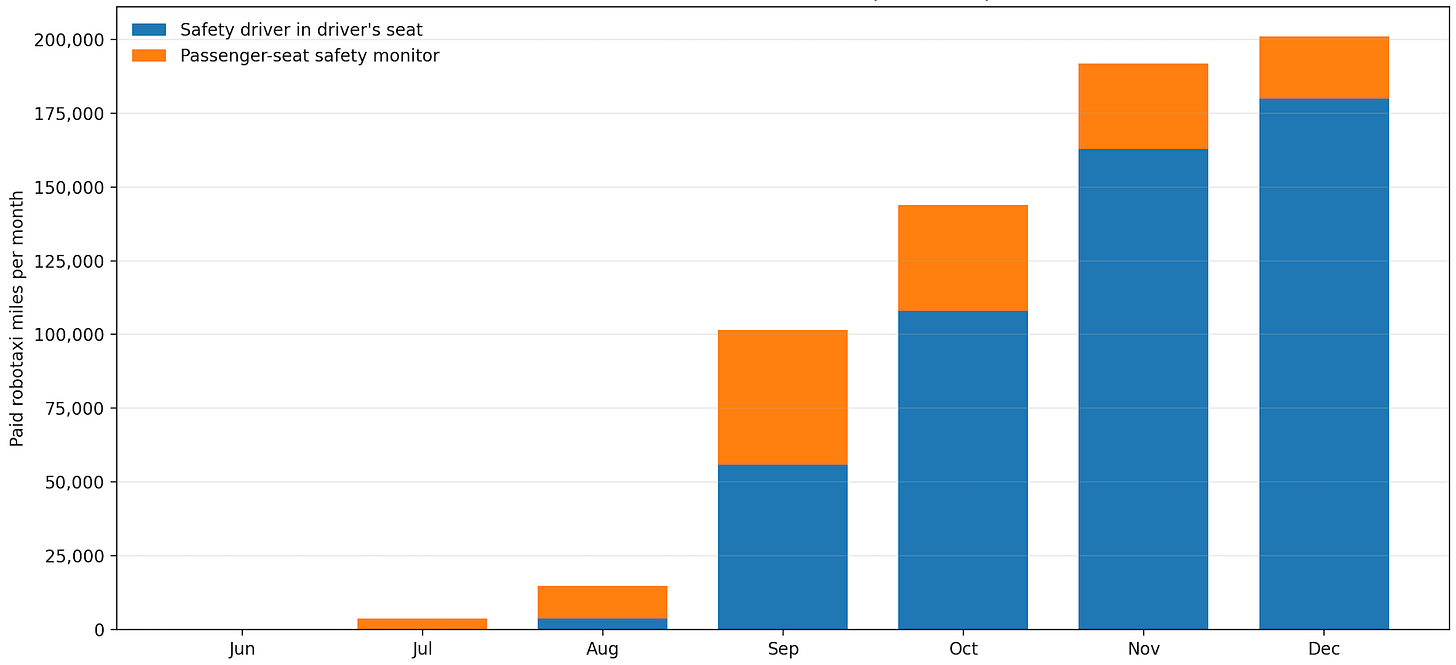

Now comes the hard part. We can tally these incidents, but what’s the denominator? Over how many miles did they happen? I believe that most Tesla robotaxi rides have had an empty driver’s seat. But starting in September, Tesla added back driver’s-seat safety drivers for rides involving highways. Or more than just those? We have no idea. We do know of cases of Tesla putting the safety driver back when the weather was questionable. In any case, only accidents without a safety driver in the driver’s seat are included in this dataset, so we do need to subtract those miles when estimating Tesla’s incident rate.

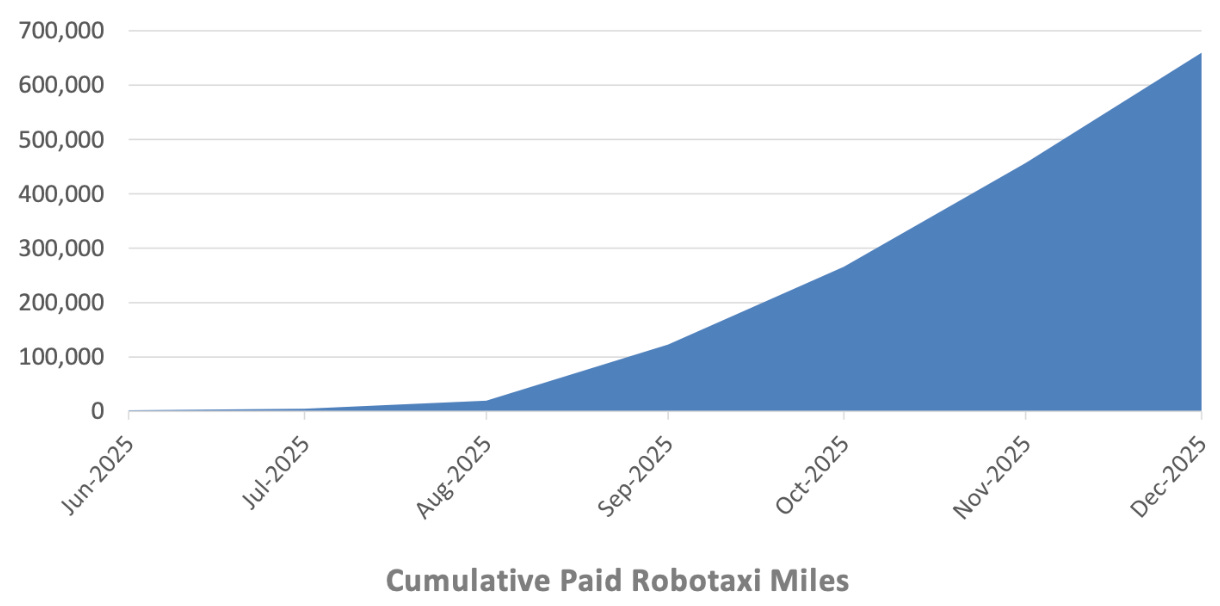

Tesla makes this as hard as possible to estimate. Here’s what they’ve published:

But that (disingenuously, I’d argue) lumps together San Francisco Bay Area miles, which are supervised, with Austin miles, which, giving Tesla a lot of undeserved benefit of the doubt, are unsupervised. Apparently Tesla has 500 robotaxis across the Bay Area and Austin combined, only 50 of which are in Austin. Naively, that means 90% of the robotaxi miles Tesla’s touting are normal Uber-style trips with the driver using FSD (possibly with impressively low disengagement rates, but as far as I know Tesla isn’t sharing that data). If I try to get the robominions to make a mileage graph that incorporates all that, we get this:

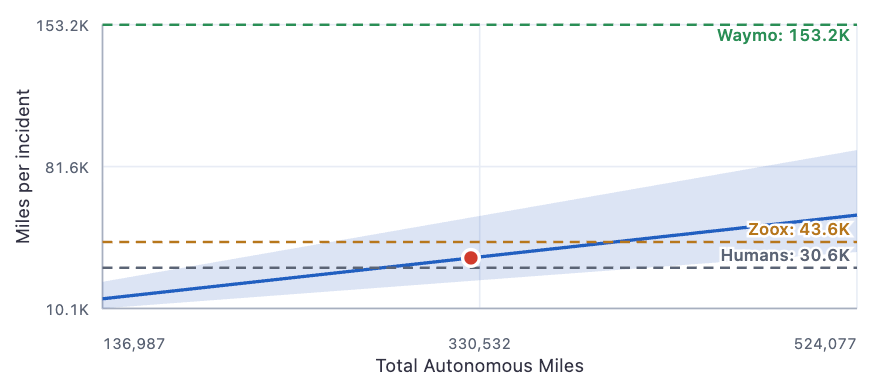

The sum of the orange bars (interpolating for December so we end when the NHTSA data ends on December 15) is 135,562 miles. Or we could trust the crowdsourced Robotaxi Tracker, which infers that Tesla robotaxis have accumulated 456,099 miles in Austin. Either way, we still need to remove miles in which the robotaxi had a safety driver in the driver’s seat. A lot of guessing is required. Here’s my conclusion:

Depending on that denominator — total autonomous miles traveled — Tesla’s possible safety record ranges from subhuman to a bit better than Zoox, with a decent chance of being somewhere in between the two. All 3 are far worse than Waymo. Note that the humans here, to keep all the comparisons apples-to-apples, are hypothetical ones that report every incident no matter how minor. We’re, somewhat handwavily, inferring this from the 5x safety multiplier of Waymos over humans.

Note also that all these numbers are pessimistic because we’re only counting paid miles, but the incident database includes testing and so-called deadhead miles, where the robotaxi is empty in between fares. But since that’s consistent across companies, the comparisons should be fair.
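To make the dependence on the denominator concrete, here is a rough Python sketch that turns the 9 incidents into a rate under each of the two mileage estimates quoted above. The `driver_seat_fraction` parameter is a hypothetical knob for the share of miles driven with a safety driver in the driver’s seat. Nobody outside Tesla knows that share, so it defaults to zero here, which flatters Tesla.

```python
INCIDENTS = 9  # Tesla robotaxi incidents in the NHTSA data for the period

# Candidate denominators quoted in the text; both are estimates.
MILE_ESTIMATES = {
    "chart_estimate": 135_562,
    "robotaxi_tracker": 456_099,
}


def incidents_per_million_miles(incidents, miles, driver_seat_fraction=0.0):
    """Incident rate after excluding miles with a driver's-seat safety driver.

    driver_seat_fraction is a hypothetical input: the share of miles to drop.
    """
    if not 0.0 <= driver_seat_fraction < 1.0:
        raise ValueError("driver_seat_fraction must be in [0, 1)")
    unsupervised_miles = miles * (1.0 - driver_seat_fraction)
    return incidents / unsupervised_miles * 1_000_000


rates = {
    name: incidents_per_million_miles(INCIDENTS, miles)
    for name, miles in MILE_ESTIMATES.items()
}

for name, rate in rates.items():
    print(f"{name}: {rate:.1f} incidents per million miles")
```

With no miles excluded, that works out to roughly 66 incidents per million miles on the smaller estimate and roughly 20 on the larger one, more than a 3x spread from the denominator alone. Excluding a fraction f of the miles scales either rate by 1/(1 - f).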

I fear that all I’ve accomplished here is to give both Tesla bears and Tesla bulls rationalizations for feeling vindicated.

Random Roundup

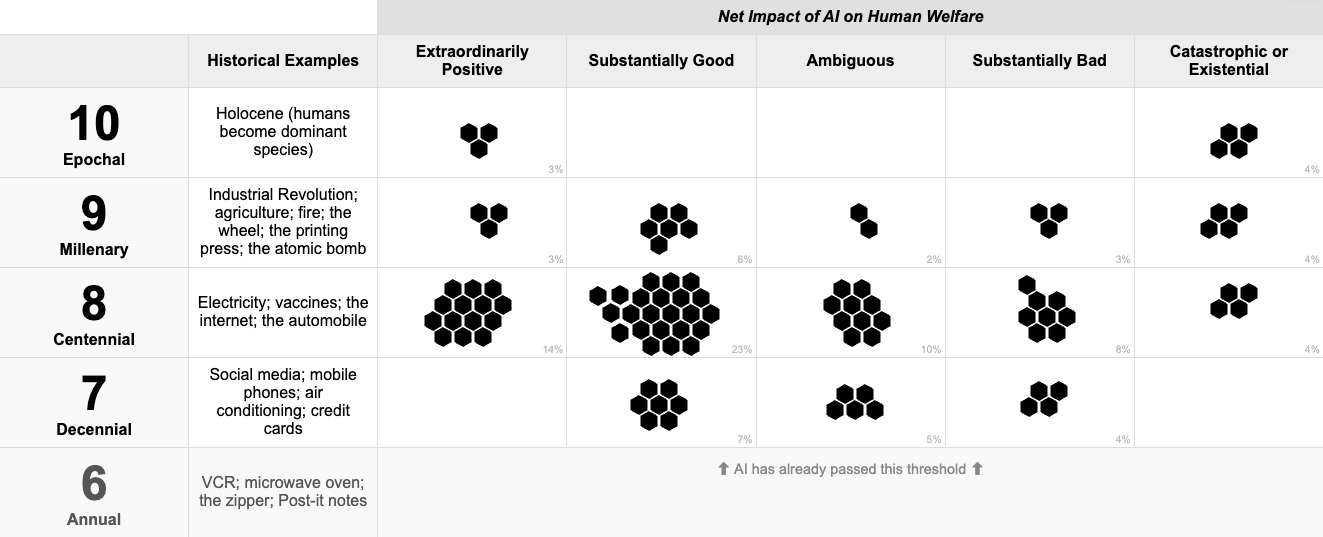

It’s been one full year since I wrote about AI Risk and the Technological Richter Scale. I think it holds up? My probability of AI plateauing at a level where it’s merely the most important tech of the decade (“7-Decennial”) has gone way down. But also my probability of “10-Epochal”, where artificial superintelligence is to humans as humans are to animals, has gone down. But only because the 3.9 years remaining till 2030 feels too short for that to play out. I see it as a potential outcome on the table in any case. I now put it at 80%+ probability that we hit “8-Centennial” (with electricity, vaccines, the internet, the automobile) or higher this decade. Maybe something intermediate between 7 and 8 deserves a fair bit of probability mass. If I use my Tech Richter Scale tool today, here’s where I’m at:

But I still go back and forth on this almost by the day. See also my thinking on this from a month ago where I said I was confident AI would exceed a “7-Decennial” before it plateaus.

My friend Linch Zhang makes the case for worrying about AI catastrophe.

Everyone I know continues to (correctly) lose their minds over how powerful Claude Code and kin have become and how fast they’re improving — just two months between palpable upgrades most recently. It’s all too easy to err in either direction here. Many people over-index on how good AI was the last time they tried it. But people like me are prone to the other extreme, getting ahead of ourselves in anticipation of what feels like it will soon be possible. One thing driving this divide in opinions is the difference between the paid and free chatbots. AI skeptics are disinclined to pay for fancier chatbots. So today Scott Alexander is trying an experiment: an “AMA (Ask Machines Anything)” post in which AI skeptics can ask their own questions that they expect to require genuine expertise. Scott is feeding those questions to the fanciest LLM (Claude Opus 4.6) and posting the answers. I’m happy to do the same experiment here if there are any AI skeptics in the audience. (What would be especially fun would be to run this like a Turing test, but I’m not sure that finding enough human expertise is realistic. It defeats the point of the Turing test if people can clock the AI because it’s the one with the more thorough and correct answers.)