AI Risk and the Technological Richter Scale

Let's drag some hexagons around to predict the future

What I’m most confident of, AGI-wise, is that anyone who’s extremely confident is wrong. Every one of the branches in this flow chart (from Scott Aaronson and Boaz Barak’s Five Worlds of AI) is a live possibility:

(For those out of the loop, Singularia refers to a positive technological singularity — think curing death and colonizing the galaxy — and Paperclipalypse refers to the opposite, where a superintelligence pursues goals incompatible with human life. Canonically, tiling the universe with paperclips.)

What’s terrifying is how hard it is to push Paperclipalypse’s probability below, say, 1%.[1]

Of course, these probabilities depend a lot on the timescale we’re talking about. But what’s still terrifying is that, at the moment, it’s looking almost like a coin flip whether we hit AGI by the end of this decade. Here’s my Manifold market posing that question one way:

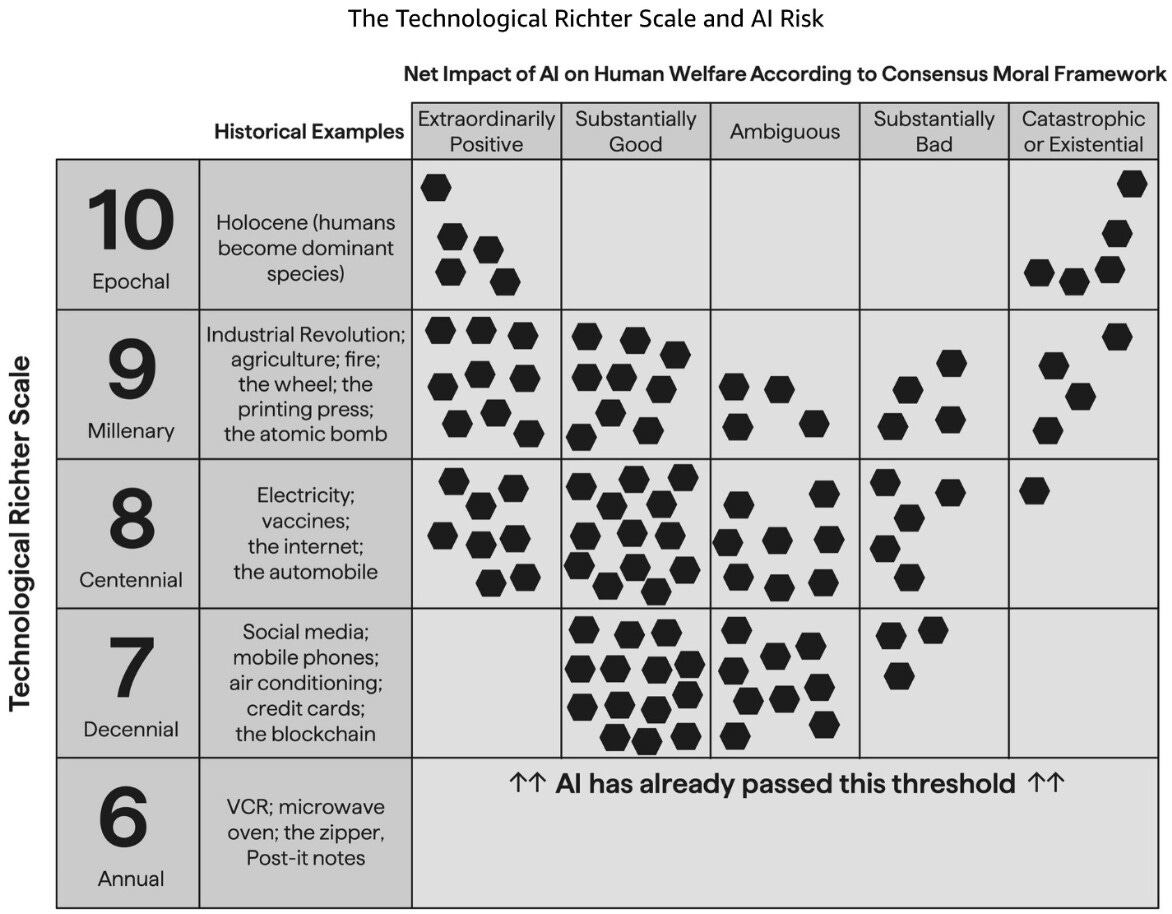

For a more fine-grained version of this question, here’s a chart from Nate Silver’s new book:

For the less visual among us, it’s basically laying out a 2-dimensional space with Impact on one axis and Goodness-vs-Badness on the other, and then thinking about how to distribute the probability across that space. (Also, please ignore “blockchain” in level 7; that’s silly. Or at least we’d need a whole other Technological Richter Scale predictor for that.)

Again, how the probabilities are distributed depends heavily on the timescale. If we give it a few decades, I don’t think there’s much probability mass left at level 7. So let’s keep restricting ourselves to the 2020s. I actually asked an AI[2] to make me an interactive version of the above chart. Here it is if you want to try it:

It’s pretty agonizing, but here’s my first pass, again focusing on what I expect by the end of 2029. It moves a lot of probability mass downward compared to Nate Silver’s version above:

There are a few weirdnesses about that distribution that I should explain. If we go past level 8, we’re probably talking about recursive self-improvement, which means we likely won’t stop at level 9. And in that case the outcome is either extremely good or extremely bad. Unfortunately, it’s skewed towards bad, for reasons I expect to expound on later. For anything short of utterly transformative AI, I’m a tech optimist. If AI’s impact doesn’t go past the level of electricity / cars / the internet, then I expect it to be a huge boon for humanity, though bad outcomes are also possible.

[1] Even a 1% chance of the literal end of the world would be terrifying. But if we did push it down that far, that would put AI on par with various other existential risks. To be clear, I believe we haven’t pushed the probability down quite that far, and AI is in fact at the top of the list of existential threats to humanity.

[2] Specifically, Codebuff. Disclosure: that’s a referral link (we’ll both get free credits if you sign up), and I’m also kind of indirectly an angel investor. Asking an AI to write a complete app and having it (sometimes) go off and do it continues to be mind-blowing. For now, though, I’d say you kind of have to be a programmer yourself to know how to help it when it gets confused, or sometimes what to ask for in the first place. Or you just need the patience to help it fix its own bugs, whether or not you understand the code yourself. This will be a good topic for a future AGI Friday.

PS: I think it's important to set the stage this way before I start spouting more opinionated opinions. My thinking is that it's very easy to cry wolf and lose credibility. Scott Alexander has a beautiful post about this: https://www.astralcodexten.com/p/against-the-generalized-anti-caution

So I want to emphasize that the reason to freak out about AGI is the uncertainty: the inability to rule out all the catastrophic scenarios.