AI 2027 and Predictions Going Back to 2008

Why AGI in 2027 or 2028 is not as implausible as it seems (and 30 years is looking like a safe upper bound)

This newsletter is all about predicting the trajectory to AGI (and sometimes, like last week, playing with new toys). This week I’ve been thoroughly outdone by a team including Scott Alexander. They’ve published AI 2027, an interactive narrative for how the march to AGI could play out in (what I think is) the most aggressive possible timeline.

(Also Scott Alexander went on a podcast for the first time ever to promote this. Kermit-the-frog-paroxysmal-arm-waving-emoji. Oh, and it was covered in the New York Times.)

If you’re not sure why you should put any stock in those people’s predictions, one of them, Daniel Kokotajlo, went through a less formal version of this exercise in 2021, pre-ChatGPT, and it was pretty impressive. You can quibble with any number of the specifics but it’s remarkably prescient. See for yourself. It steps through each future year starting with 2022. It’s fairly evenly split between predictions that were too bullish and those that were too bearish. Overall it feels like it’s about as good as it was possible to do from what we knew in 2021. The extrapolations on training compute and how far scaling up would take us are particularly accurate.

Unlike Gary Marcus, who just repeats year after year that scaling is about to hit a wall, Kokotajlo predicted the current apparent diminishing returns to scaling, to the year (ish). Foreseeing chain-of-thought (“bureaucracies”) was also impressive.

As I’ve mentioned in past AGI Fridays, it wasn’t until 2022, when I saw Google’s PaLM paper, about the first language model that exhibited common sense reasoning and could explain jokes, that I realized timelines to AGI might have shrunk. And I didn’t even really believe it till I tried it myself with GPT-3.5 (aka ChatGPT) later that year. In 2021, with GPT-3, I was still pooh-poohing large language models as generating weirdly plausible text but with no real understanding behind it. Scott Alexander, by contrast, in 2019 when GPT-2 came out, immediately called it a wake-up call in terms of AGI progress.

I haven’t been a complete idiot with my own predictions though. In 2008 I bet $10k against Anna Salamon’s $100 that AI would not pass the Turing test by 2018. I was pretty dead certain it couldn’t happen that soon. In fact, we agreed on a strict version of the Turing test that even now, AI can’t pass:

(I put all the dialog and documentation of our wager at doc.dreev.es/turingbet for posterity.)

In 2016 I had a wager with Eliezer Yudkowsky about AlphaGo beating Lee Sedol at Go. Eliezer put the probability of a win for AlphaGo at less than 60% and I liked those odds. Here’s the key excerpt:

Excellent! This is a virtual handshake that [Eliezer] will pay [Danny] $67 if AlphaGo wins and [Danny] will pay [Eliezer] $100 if the human wins.

Of course AlphaGo famously won, but it was close (at the time). Now there’s no such thing as humans getting anywhere near AI at any board-game or card-game style game. [EDIT: Oops, that’s an exaggeration, at least in 2025 so far. See discussion in the comments. Huge thanks to Matt Rudary and other readers keeping me honest here.]
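For anyone who wants to sanity-check the odds in the two wagers above, here is a minimal sketch of the break-even arithmetic. The helper name `break_even_probability` is made up for illustration, and it assumes both bettors care only about expected dollars (no risk aversion, no inflation over the ten-year bet).

```python
def break_even_probability(risk: float, win: float) -> float:
    """Probability of winning at which risking `risk` to win `win` has zero expected value.

    Solves p * win - (1 - p) * risk = 0 for p.
    Hypothetical helper for illustration; assumes a risk-neutral bettor.
    """
    return risk / (risk + win)


# 2016 AlphaGo bet: Danny risks $100 (human wins) to win $67 (AlphaGo wins).
alphago = break_even_probability(risk=100, win=67)

# 2008 Turing test bet: Danny risks $10,000 (AI passes by 2018) to win $100 (it doesn't).
turing = break_even_probability(risk=10_000, win=100)

print(f"AlphaGo bet break-even: {alphago:.3f}")  # 0.599
print(f"Turing bet break-even:  {turing:.3f}")   # 0.990
```

So the $67-vs-$100 stakes are fair at almost exactly a 60% chance of AlphaGo winning, which matches Eliezer's "less than 60%". And the $10k-vs-$100 stakes only make sense for someone about 99% sure AI would not pass by 2018.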

The Singularity (or at least Recursive Self-Improvement)

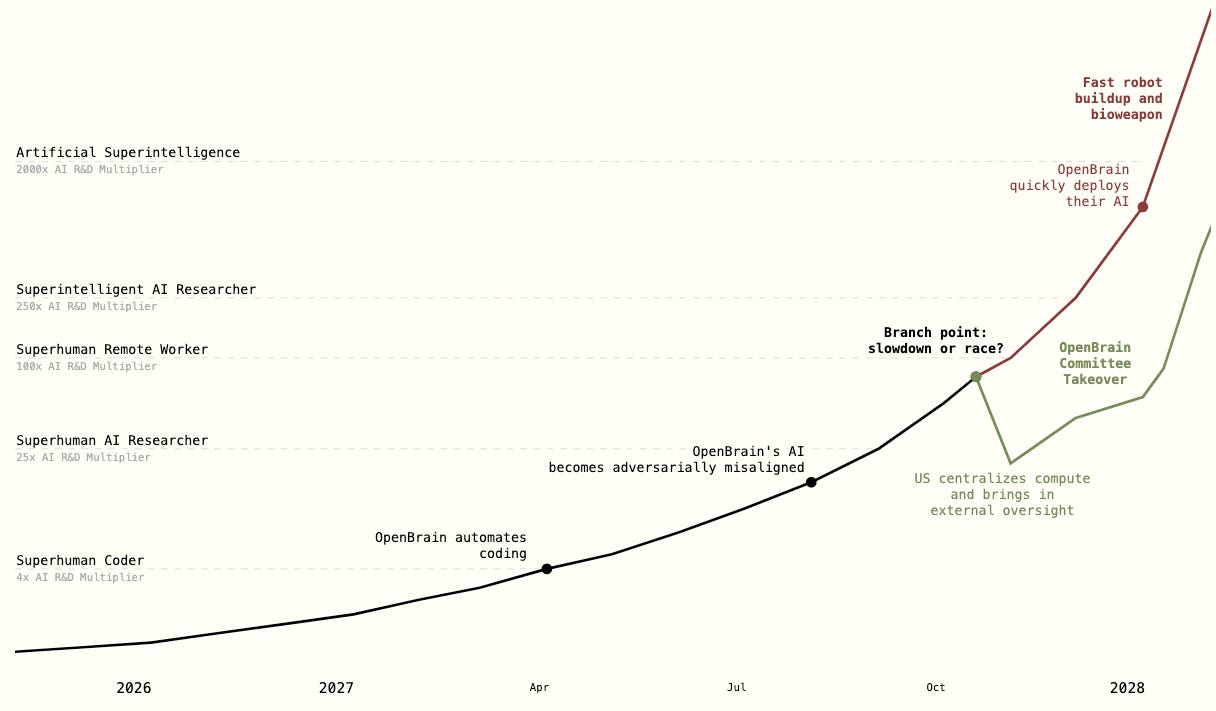

I haven’t made it far enough into the tour de force that is the AI 2027 document to go into detail yet, but the crux is that we’re (plausibly) within a couple of years of automating the coding that frontier labs do as part of their AI research, and things spiral fast once that happens:

(The main AI company in the fictionalized scenario planning from AI 2027 is called “OpenBrain”.)

One more 2016 prediction: My PhD advisor, Michael Wellman, put his cards on the table with his 80% confidence interval of 2040-2100 for AGI in a talk he gave back then. My own confidence interval got a bit wider than that before it shrank again. By 2021 I was at 2040-2140, before my head belatedly exploded in 2022.

This Week in the News

All I had time for was AI 2027 this week, ok?

Ok, fine, I can link to Scott Alexander talking about it at least.

And, twist my arm, if you’re up for hearing two brilliant people argue for 4 hours about whether AGI is 3 years or 3 decades away, a podcast with Ege Erdil and Matthew Barnett promises to be edifying. Also it’s kind of wild that 30 years is pretty much the most bearish possible estimate at this point. Still within most of our lifetimes!

Hey, catching up on AGI Friday after a little while away. I just wanted to respond to this point:

> Now there’s no such thing as humans getting anywhere near AI at any board-game or card-game style game.

In fact, the state of the art of AI in the card game bridge is hot garbage. Robots are truly bad at the game. Bridge has three main aspects of play: bidding, defense, and declaring. The robots are bad at all three, but worst at bidding.