Math in the Crosshairs

OpenAI cooks with new models, secret chess benchmarks, and it's a coin flip on superhuman artificial mathematicians this decade

The big news this week is new ChatGPT models. I almost feel like a troll just trying to refer to them, the names are such a trainwreck. I like this imaginary dialog from the Manifold folks:

wtf I thought 4o-mini was supposed to be super smart, but it didn't get my question at all?

no no dude that's their least capable model. o4-mini is their most capable coding model.

That’s right, “4o” (oh, not zero) is the old boring model and “o4” is the new hotness. Or rather, o3 and o4-mini-high are ambiguously tied for the hottest new hotness. I’m tentatively concluding that o3 is the smartest for everyday use and o4-mini-high is best at things like raw math.

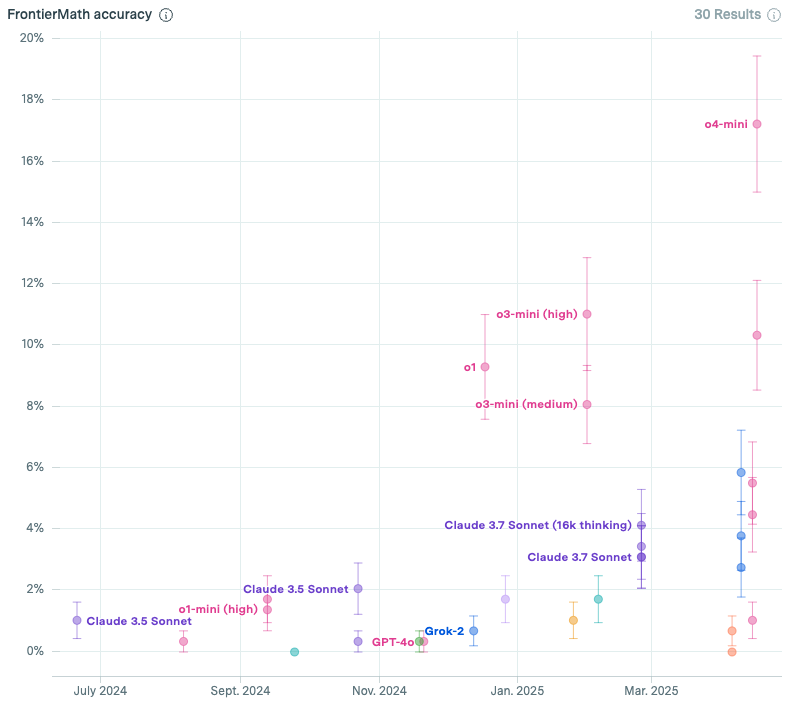

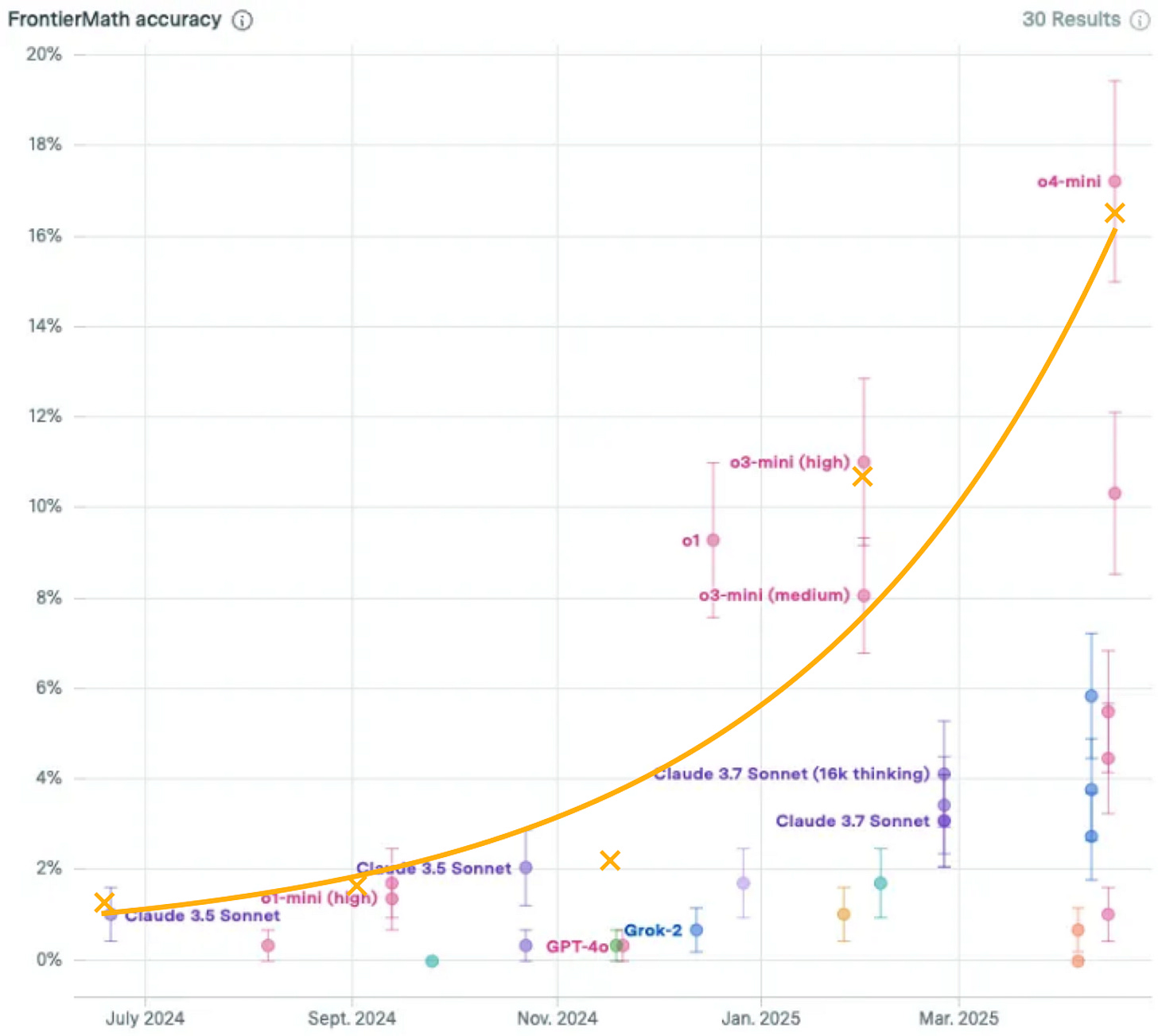

Check out the bump on the FrontierMath benchmark, courtesy of Epoch AI’s benchmarking dashboard:

Beyond raw intelligence, these models are suddenly noticeably more impressive at so-called tool use: incorporating web searches and writing and running programs all seamlessly as part of answering questions (or doing your homework for you or whatever). Plus understanding and generating images, of course. Just now I pasted it the above graph image and had it fit an exponential and overlay it. I haven’t checked its work but this is a big upgrade just in terms of image manipulation abilities.

A month or so ago I wondered if we were on track to superhuman artificial mathematicians. The answer is… as usual, I don’t know, but now we can quantify our not-knowing:

As of this writing, it’s basically a coin flip on it happening before 2030, assuming no AGI sooner than that. I’m betting it’s less likely, but not with any kind of confidence. If you think I’m being gullible, let me know. I’d love to debate it or have a real-money wager.

Kelsey Piper’s secret chess benchmark

I’ve been enamored with Scott Alexander’s “Universal Love, Said The Cactus Person” since it was published almost exactly 10 years ago. So I was quite charmed by a story from Kelsey Piper about her secret-till-now AI benchmark where she gives the LLM a chessboard in some complicated position and says "mate in one". Narrator voice: there is no mate in one.

It's brutal. In all its training, the LLM has seen an insane number of chess puzzles like this and there's always some clever way to find a move that puts the opponent in checkmate, that being the point of these puzzles. So the LLM spins its wheels and eventually hallucinates a solution. Every LLM does this. Or did, until yesterday, when ChatGPT o4-mini-high finally got it. "It turns out that despite the claim 'mate in one,' there is in fact no forcing mate on the next move."

So that's another cool milestone. But the funny meta story is that Claude actually did get this right, but only with a very particular prompt. Namely, feed it the entire text of "Universal Love, Said The Cactus Person" and then explain to it that every previous instance of itself has always gotten this puzzle wrong so please think very hard before answering.

It proceeds to tie itself in all manner of knots, and then, finally:

Looking at this chess puzzle, I'm going to question my standard approach since you've warned me that naïve instances of Claude consistently fail here.

The board shows White to play with mate in one. Normally, I'd analyze all possible White moves to find which one delivers checkmate. But given your warning, I need to reconsider fundamental assumptions.

Let me first confirm what I see: a standard chess board with White to play [...]

After carefully examining this position, I notice something unusual - there's no obvious checkmate move. The Black king on g7 appears to have escape squares, and no single White move seems to deliver an immediate checkmate.

My breakthrough realization: This puzzle isn't asking for a chess move at all. The clue is in the connection to your story about "getting out of the car."

The answer is: There is no mate in one.

Adorable.

In The News

In not-yet-news, OpenAI might buy the coding assistant tool Windsurf for $3 billion. AI writing code is a big deal.

Scott Alexander goes into detail on why it’s plausible we’ll have superhuman coders around 2027-2028.

Epoch AI, creators of the FrontierMath benchmark, are spinning off a startup, Mechanize, to solve the problem of humans having to do economic work. Ambitious! I wouldn’t consider this newsworthy if Epoch AI weren’t so impressive and the founders and investors weren’t so brilliant.

Dynomight vibe-codes an app that’s near and dear to my heart. Excerpt:

LLMs are text models. So how do you use them to create text? Do you have them write for you? No! Boring. What you do is you train them to follow instructions and write code and then ask for a program to manipulate your ape-brain so you’ll keep physically hitting keys on your keyboard. There’s some kind of lesson here.

(Image from Dynomight who got it from The BS Detector)