Gary the Bear Doubles Down

And we hear Leopold the Bull's case for AGI any OOM now

Last week in “Bulls vs Bears” we talked about Gary Marcus, who continues to double down on his prediction that generative AI is hitting a wall any day now. I think my new best argument against Gary Marcus is in his own by-line of his post from yesterday on what a nothing burger GPT 4.5 is:

Gary Marcus was right all along that pure scaling would eventually hit a wall, or at least reach a point of massively diminishing returns.

The cope, as the kids say, is out of control. Note the link to his 2022 prediction — literally the month before Google announced their PaLM model (itself just ahead of ChatGPT launching)! — about how deep learning was hitting a wall.

(But, ok, we may yet hit that wall and then Gary will have the last laugh.)

Google’s PaLM announcement was my holy shit moment:

I strongly suspected that Google was cherrypicking or otherwise cheating and that LLMs could not do what Google was claiming. Turns out I was extremely wrong.

Six years of history

Speaking of me being extremely wrong, some of my friends had their holy shit moment in 2019 with GPT-2. It generated weirdly plausible human-like text with, as far as I could see, no actual understanding. Scott Alexander, as soon as it was released, called it a wake-up call in terms of AGI progress and evidence against the presumption that AGI wouldn’t happen by scaling up existing architectures. The next year, in 2020, GPT-3 had some understanding but, like Gary Marcus, I was still failing to extrapolate forward. Then there was Google’s PaLM but we had to take Google’s word for that. Finally, later in 2022, OpenAI released ChatGPT (GPT-3.5) and the whole world had their holy shit moment. Except Gary Marcus of course, who, as we talked about last time, predicted that that was as much as you could milk out of these things. Of course, another year went by and we got GPT-4 and Claude, which were markedly smarter, in 2023. Then it felt like there was a brief lull but in retrospect there’s been yet another quantum leap in AI capability: bigger context windows, multi-modal input and output, big reductions in cost, and now reasoning models.

Leopold Aschenbrenner and the “any OOM now” theory

OOM means “order of magnitude”, as in multiplying by 10. I’m taking Leopold Aschenbrenner and his tour de force “Situational Awareness” as the bull counterpart to Gary Marcus. Here’s Aschenbrenner’s argument in a nutshell. Consider the amount of training compute devoted to each of the milestones in the previous section. We measure that in FLOPs — floating point operations, basically adding or multiplying two decimal numbers. The number of FLOPs for the latest LLMs is about 1,000,000,000,000,000,000,000,000,000 or 1027. So 27 OOMs. Pretty wild.

Aschenbrenner’s point is that you can look back to 2019 and earlier and every OOM of additional training compute seems to correspond to a noticeable increase in AI capability:

GPT-2 used 1021 FLOPs and was arguably as smart as a preschooler

GPT-3 used 1023 FLOPs and was maybe as smart as an elementary schooler

GPT-4 used 1025 FLOPs and was like a smart high schooler

We keep adding zeros, and are doing so especially quickly now as venture capital pours in, and every time we do, the AI gets smarter. This doesn’t prove we’re about to hit AGI, just that we haven’t exactly hit diminishing returns yet.

(Aschenbrenner’s argument goes further than this. For example, we also keep finding ways to squeeze more out of a given amount of training compute. Like chain-of-thought reasoning models and other tricks — Aschenbrenner calls these tricks “unhobbling”.)

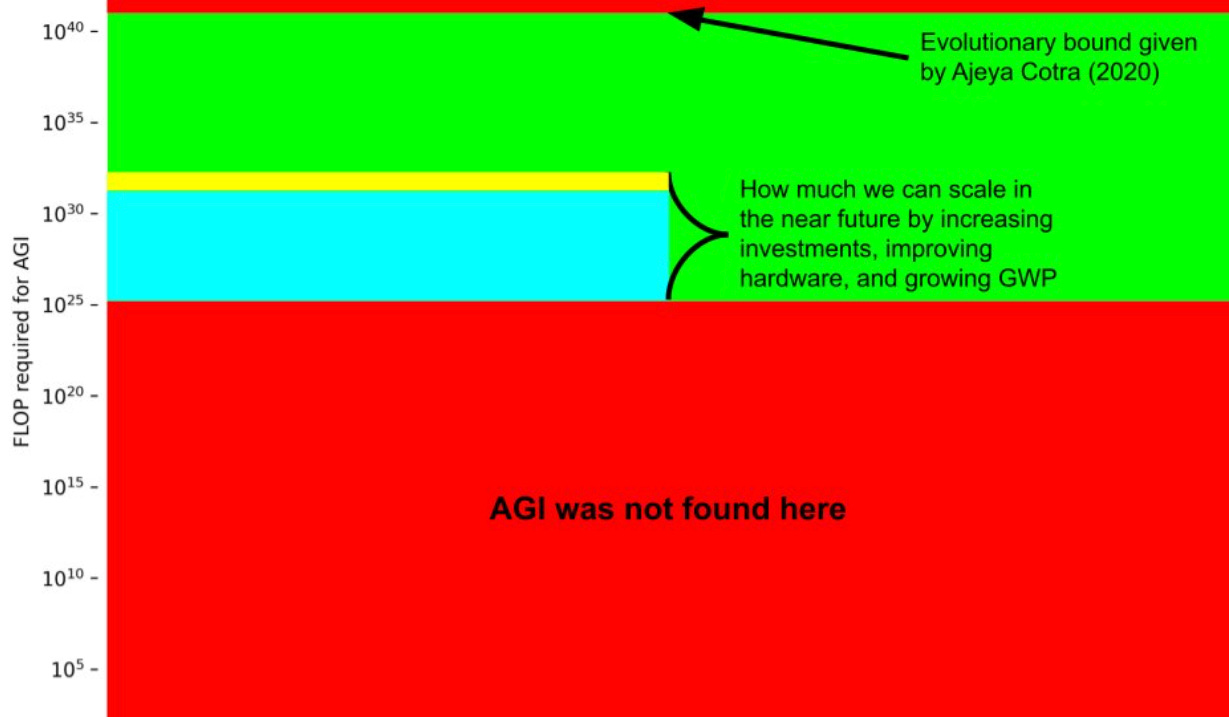

Can we say anything about how many FLOPs AGI requires? Well, there’s a handwavy estimate that evolution put in the equivalent of 1043 FLOPs to make our brains. Even if you don’t take that estimate seriously, it’s believable that at some number of OOMs well before 1043, AGI is going to fall out.

Aschenbrenner cites this illustration from Matthew Barnett to give a visceral sense of the “any OOM now” theory.

In the news

Anthropic released Claude Sonnet 3.7 this week. It’s smarter and better and continues to be what I recommend for normal people.

And my own update about Grok 3: Last week I predicted Grok 3 would soon fall behind the frontier again. Today I’m less sure of that after seeing that Grok 3 seems to be at parity with ChatGPT’s top reasoning models and ahead of Claude Sonnet 3.7 in terms of simple geometric reasoning. We’ll see!

I conducted my own trial of OpenAI’s Deep Research, which I’m posting on the Beeminder blog. It’s about the science of Beeminder but you can ignore the Beeminder-y parts and just use it to get a sense of how useful to expect Deep Research to be for you. My verdict: not exactly useful quite yet. “Let’s See If OpenAI’s Deep Research Can Tell Us about the Science of Beeminder” [Funny story: This went to press linking to the Beeminder forum version of my writeup since the blog version wasn’t quite ready but Beeminder, ironically, made me get this out the door at 5pm Friday.]