Blogception

And a Tesla update, a superhuman math update, and vibe-coding daylight savings

As I blogged on my other blog, I’ll be blogging at you live from Berkeley the next two weeks. The event I’m part of, Inkhaven, isn’t about AI specifically but some of my blogging friends/heroes who are obsessed with AI — Scott Alexander, Dynomight, Scott Aaronson — will be there. The venue, Lighthaven, is kind of the epicenter of Bay Area AI circles. Sam Altman, who I presume is a household name by now, to my medium-sized chagrin, was even there last month for a conference. Again, I’m just going there to help writers set up tools like Beeminder (hot tip: name your blog after a day of the week so you’re compelled to publish something weekly on that day) but AI is sure to be a hot topic.

Tesla robotaxi update

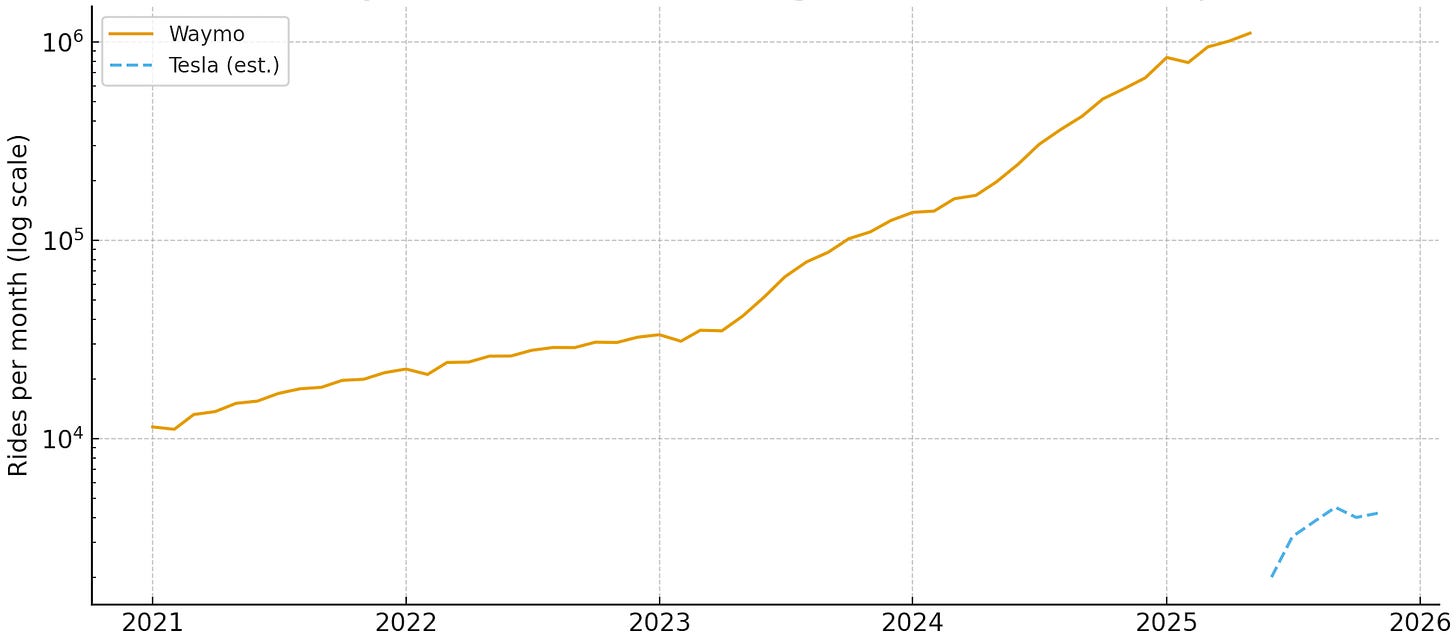

Even if I end up wrong, I continue, with every passing week, to feel gradually less stupid about my prediction from April that Tesla was not serious about its supposed robotaxi launch. That it didn’t count, basically. The passenger-seat safety monitors are still there (Musk promises they’ll be gone by the end of 2025) and there’s been hardly any scaling up from the original dozen or so cars. They manage to give a different impression by introducing ride-sharing services in cities besides Austin, but those invariably have a backup driver in the driver’s seat. They say that’s for regulatory reasons, but they’re not even applying for the permits to get the backup drivers out. Waymo, of course, has no one in the driver’s seat or passenger seat, including in regulation-heavy places like California, and they continue to scale up steadily but exponentially.

On the other hand, Tesla can’t be totally faking it. Or at least they clearly think they’re close to being able to stop faking it? They are gearing up for mass production of their Cybercab. It might not even have a steering wheel. It’s not currently legal to make more than 2,500 cars without steering wheels per year, but Musk has an answer to that: “Once it becomes extremely normal in cities, regulators will have just fewer and fewer reasons to say no.” Maybe! It’s true that it’s about to become normal, and fast. Several companies will be launching driverless services of various kinds in various cities in 2026.

But always with lidar sensors. For me to be fully vindicated in my original prediction, Tesla will have to cave on their insistence that unsupervised self-driving is possible with only cameras. I mean, of course it’s possible, since humans do it with two measly eyeballs, and presumably the camera-only tech can eventually get there. It’s just that every other manufacturer thinks robot drivers aren’t safe enough without superhuman sensors.

Which is the other possibility for how Tesla pulled off their robotaxi launch (and delivery of a single car to a customer with no one in the vehicle): They’re yolo’ing it, keeping the total autonomous miles low enough that luck is on their side while they finish figuring out actual level 4 autonomy.
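To make the "luck is on their side" logic concrete, here's a minimal sketch that treats serious incidents as a Poisson process, so the chance of getting through a given number of miles with zero incidents is exp(-rate × miles). The incident rate below is a made-up placeholder, not a measured number for Tesla or anyone else, and the function name is just something I picked for illustration.

```python
import math


def prob_no_incident(miles: float, incidents_per_million_miles: float) -> float:
    """Chance of zero incidents over `miles`, assuming incidents are Poisson."""
    expected_incidents = miles * incidents_per_million_miles / 1_000_000
    return math.exp(-expected_incidents)


# HYPOTHETICAL rate, chosen only to show the shape of the curve.
HYPOTHETICAL_RATE = 5.0  # incidents per million miles (assumption, not data)

for miles in (10_000, 100_000, 1_000_000, 10_000_000):
    p = prob_no_incident(miles, HYPOTHETICAL_RATE)
    print(f"{miles:>12,} miles: P(no incident) = {p:.3f}")
```

Whatever the true rate is, the shape is the same: a small fleet can plausibly go incident-free on luck alone, and that luck runs out exponentially fast as the miles pile up.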

ADDED: Take this with a grain of salt since I just had my robominions magically generate it, but here’s what Waymo vs Tesla plausibly looks like, counting only rides with an empty driver’s seat:

Superhuman math update

This is another favorite topic of mine, as I’ve gradually come to terms with the possibility that, even before AGI, AI might crush humans at math problem-solving the way it crushes us at chess and go. People used to think that it would take AGI to beat grandmasters at chess. Later people (including me!) thought it at least plausible that it would take AGI to beat grandmasters at go. I still think it’s plausible that expert-level math problem-solving (not to say the rest of a mathematician’s job, like knowing the right questions to ask) is AGI-complete. But the probability keeps dropping.

This week, Terence Tao offers the following visualization for a set of 67 math optimization problems. Roughly speaking, green means superhuman performance, red is subhuman (where the humans in question are the best we’ve got), blue means the AI briefly pushed the frontier but humans got back on top, and gray is either parity with the best humans or indeterminate.

Terence Tao is arguably the top living mathematician and has been taking this seriously for years now. He’s not falling for hype. It’s safe to say that AI merely accelerating math and science research is the bear case. Anyone referring to AI as fancy autocomplete at this point is falling for the anti-hype. Which is not to say the whole industry isn’t way out over its skis, with a bubble due to pop.

Winning internet arguments with vibe-coding

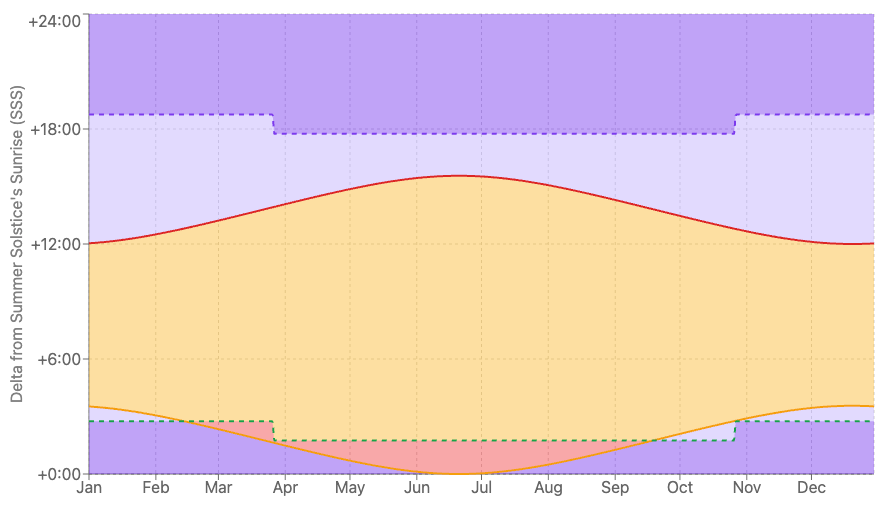

Just for fun, let me show you this nifty tool that Claude and Codebuff made for me:

It started with an internet argument, of course, about whether daylight savings time is good or not. I’m in favor so I wanted to demonstrate just how much daylight is saved by it. You can try the tool if you’re intrigued or scandalized. But I’m mentioning it here because I think it’s a good case study for the state of AI assistance for software development. I didn’t write any of the code for this thing, but I did spend (God this is embarrassing) 11 hours and 15 minutes (according to TagTime) coaxing the AI to make this thing work how I wanted it to. You can see my side of the endless dialog in the GitHub repo for it.

My favorite excerpt:

CLAUDE: This is actually quite tedious to get right for 50+ cities. Should I go through and carefully adjust each one, checking which cities observe DST and converting accordingly?

ME: you’re sure right that it sounds tedious. good thing you’re a computer!

CLAUDE: [does the thing]

(Notice that a bunch of the work it did — entirely on its own, to be clear — involved going out on the internet and looking up hundreds of facts about sunrise times and locations and DST rules for dozens of cities. Not always correctly. But it counts as progress on the prediction that AI agents will be useful by the end of this year. As of now it’s still pretty much a coin flip on Manifold.)

If you’re pessimistic about AGI, there’s plenty of fodder here to bolster your case. And vice versa. But what strikes me is how steadily it’s improving. It might plateau below human-level software engineers but I don’t see how you can have much confidence in that prediction. Being able to point to specific failures and mistakes humans wouldn’t have made is missing the point, I think. The improvement just isn’t slowing down. So the possibility that it shoots past human level is real.

The camera-only vs lidar debate is really the crux of it. Tesla's insistence on vision-only might be ideological at this point, but the scaling problem you mention is key. If they can't get past that dozen-car fleet without safety monitors, the whole robotaxi thesis falls apart regardless of sensor choice.

“Musk promises…”

You should put promises in quotes…